Something had been playing on my mind since I joined IBM a little over 2 years ago. My job is to help my customers buy a combination of IBM software, hardware and services. Some describe these as ‘solutions’, ‘innovations’ or ‘outcomes’. I like to think of them as insurance. We all need a sales angle (wink-smiley face) and right now, that is mine.

However, until recently, I had never actually seen the insurance I sell in operation in a customer’s environment.

So, last week, I asked a client if I could come and visit their datacentre. He told me no other salesperson had ever requested that, leaving me thinking I had uncovered a point of differentiation (see one of my previous posts). A meeting request promptly hit my inbox.

Five days later, through the windscreen of my 1971 Alfa Romeo coupe (which I took out for this special occasion), I watched a very solid-looking gate slide slowly from right to left, allowing me to enter the facility and drive to a parking bay marked ‘Visitors’.

Eventually finding my way to the security reception building, I presented my IBM ID and driver’s licence, filled in many forms and read a 15-page induction document. The security guard then asked, “Did you read it properly?” So, I read it again.

In time, my identity cards were returned (with the addition of a coloured label marked ‘Inducted’ and the date). I was also issued with a visitor pass.

My client and host for the day led me through a labyrinth of doorways, hallways, suspended walkways and stairwells. We were on our way to join a half dozen guys (client and IBM staff), who would be my tour guides. I suspect they don’t get many people asking for a tour and were determined to make my visit memorable. Spoiler – they absolutely succeeded.

Every few seconds, my customer would bark a command in my direction.

“You have to tap EVERY card reader.

This one you need to tap and enter this PIN.

Look at the camera up there and tap your card here.

You forgot to tap that one.

Why did you wear a suit?

You forgot to tap again”.

At one point during our hike, he gestured towards a head jamb above one set of doors which featured some very obvious physical damage: numerous deep scratches, chips and missing paint. He explained with an element of pride, “Every mainframe we ever brought through here left its mark on this doorway”.

So far, this was turning out to be an entertaining new experience – and I had not yet seen a piece of hardware.

Please allow me to provide some context. My first job after graduation was with Canon. I was a photocopier engineer and I enjoyed that role for 10 years. If you were someone who loved Meccano as a kid, being a copier tech in the 1990s was amazing. In this datacentre, I really wanted to see hardware, lights and things that go beep. Hollywood style.

Four rooms, across two floors of the data centre, were to be my treat this day. I was shown diesel-gulping industrial power generation installations and massive water supply and air-conditioning systems. If (when) war breaks out, I know where I will be heading.

The client and IBM teams proudly led me past seemingly endless racks of hardware. My attention was drawn to power cables as thick as my forearm and to slim, elegant fibre optic cables which, as far as data throughput is concerned, punch well above their negligible weight.

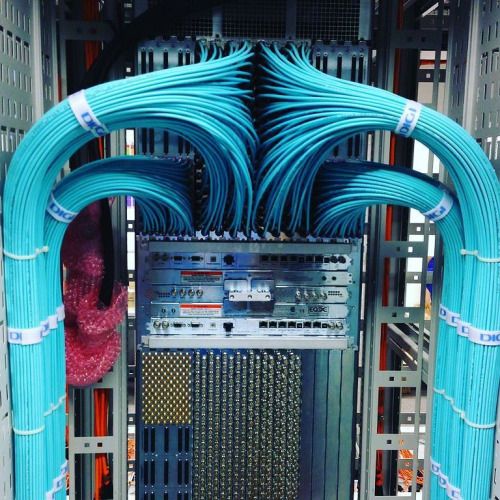

The amount of network cabling was completely mind-boggling. Whether out of sight (underfloor) or in full view (overhead), all of it was fastidiously labelled and routed – by hand. I have a newfound understanding of, and respect for, the people who practise this art. I now understand why data centre cabling is so expensive.

One IBMer opened the bizarrely sculptured doors of one mainframe and explained its contents. He described the various components and redundancy features of the Z System. All I really noticed was how clean and uncluttered the ‘box’ was, considering the crucial role it plays within this computing environment.

Subsequently, I was shown the powerful beasts known as mid-range IBM Power systems, countless racks of x86 servers, massive data storage boxes, robotic tape backup systems (I saw one of these ‘move’, which I was told is a rare treat) and loads of other kit.

OK, anyone who reads my posts will know I have a tendency towards verbal diarrhoea. So, I will try to keep the following brief.

This client has millions of customers, and its services are delivered primarily through technology (and great customer service). The heart of this client’s technology infrastructure is the IBM Z – the mainframe – Big Iron. Accidentally kick out the plug delivering power to the Z and there is no company. Period. Hundreds of millions of dollars of technology situated in this datacentre would become pointless without the mainframe. Remove the heart and the body cannot function.

These IBM Z behemoths, processing billions of dollars’ worth of transactions per day, were doing their job while emitting nothing more than a pleasant warm breeze. Less heat than some photocopiers I worked on all those years ago.

It was the lack of heat which stuck in my mind as the tour continued.

Every other type of server in this datacentre was generating significantly more heat, placing great demands on the air-conditioning systems. Yet the mainframes occupied a hundred times less floor space and required considerably fewer propeller heads (no offence intended) to maintain.

The mainframes (and mid-range IBM Power systems) were happily snuggled together, side by side like intimate lovers. Other server racks were installed with a 50 cm air gap between each of them. To me, that looked awful and seemed an inefficient use of floor space.

“Why are those racks installed that way?” I asked. “To help with cooling,” my guide replied.

Imagine for a moment how much energy is consumed just to keep a data centre cool.

I am not saying for a moment there is something wrong with non-IBM systems. Far from it. Almost everything I saw in that building was state-of-the-art. As computers become increasingly powerful, heat management remains an ever-present challenge for engineers to overcome.

As I pondered my observations, the penny dropped – the heart of this business was not just exceptionally resilient and secure, but also rather nice for our environment.

The other thing I thought was ‘IBM’s marketing team needs a good kick in the arse, or an increased budget’.

There were hundreds of energy-consuming servers in that building providing key computing services that would run perfectly well on the mainframe. I mean, on ONE mainframe! Especially those running on Linux.

Food for thought.

Thank you very much for reading and all the fish.

The views and opinions expressed in this article are solely those of the original author and other contributors. These views and opinions do not represent those of IBM (International Business Machines).

#technology #infrastructurematters #IBMZ